by Richard Stirling

In November 2020, the most senior nuclear scientist in Iran was assassinated, with Iranian officials attributing the killing to Israel. Iran claimed that Mohsen Fakhrizadeh was shot by a gun operated remotely. Apparently, facial recognition technology targeted him but left his wife untouched.

It’s difficult to know how much of this is true. Did an algorithm really automatically open fire having identified Fakhrizadeh? Was a human behind the controls with facial recognition software present to assist target identification? Or was the whole story exaggerated to make Israel seem malign and all-powerful? Whatever the answer, it didn’t stop The Times running a headline claiming that ‘the era of AI assassinations have arrived’.

However dubious and extreme it may be, the case neatly captures the inherent ‘creepiness’ of the idea of AI decision-making. Automated decision-making across sectors and at all levels of government is something that we find instinctively worrying, and it’s not at all clear that we have a good ethical framework for understanding and justifying it.

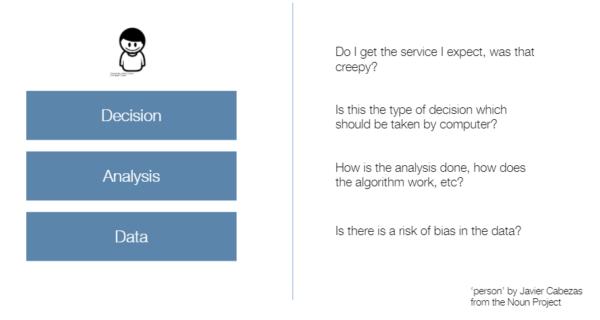

A government seeking to tackle the ethical issues raised by AI and automated decisions needs to consider the problem at different levels.

As with so many other problems, the first step is to ask yourself: what does the person expect, and are you about to do something creepy? Here the role of government is to protect the needs of the citizen and make sure that AI use respects societal expectations. The answer will vary from country to country and will probably have exceptions.

The next question is whether the decision is one that ought to be taken by a computer at all. Are there some decisions that are so important that they ought to be taken by people? Many people argue that AI shouldn’t be able to take kill decisions. Factors to consider are the impact on people’s lives and liberty, and the false positive/false negative rates.
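To make the false positive/false negative point concrete, here is a minimal sketch of how those rates are calculated from the outcomes of an automated decision. The function name and the caseload figures are hypothetical, chosen purely for illustration; they do not come from any real system.

```python
def error_rates(tp, fp, tn, fn):
    """Return error rates from counts of decision outcomes.

    tp: correctly flagged cases      fp: wrongly flagged cases
    tn: correctly cleared cases      fn: wrongly cleared cases
    """
    negatives = fp + tn   # everyone who should NOT have been flagged
    positives = tp + fn   # everyone who SHOULD have been flagged
    flagged = tp + fp     # everyone the system actually flagged
    return {
        "false_positive_rate": fp / negatives if negatives else 0.0,
        "false_negative_rate": fn / positives if positives else 0.0,
        "share_of_flags_that_are_wrong": fp / flagged if flagged else 0.0,
    }


# Hypothetical caseload: 10,000 people screened, 100 of whom are genuine cases.
rates = error_rates(tp=90, fp=495, tn=9405, fn=10)
for name, value in rates.items():
    print(f"{name}: {value:.1%}")
```

With these made-up numbers the system wrongly flags only 5% of the people it should have cleared, yet most of the people it flags are flagged in error, because genuine cases are rare. That is why the error rates have to be weighed alongside the impact of a wrong decision on someone's life or liberty.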

Next is to consider how the analysis is done. Some decisions should be transparent, with the way they are taken open to challenge, e.g. decisions on health interventions. For others, it is enough to know the inputs, e.g. prioritisation and triage of a caseload. While techniques like neural nets can be computationally advantageous, they are (currently) opaque. When rolling out an AI system, a government needs to make sure it provides appropriate transparency and accountability to the public.

The final level is the data – data can contain bias in how it is collected, or even bias in what is collected. We recommend that governments use tools like the data ethics canvas to make sure they have taken these considerations into account.

Ethics is a complex field and this is just one way to think through the issues. Get in touch if you would like to discuss things further.